Fairness for Image Generation with Uncertain Sensitive Attributes

|

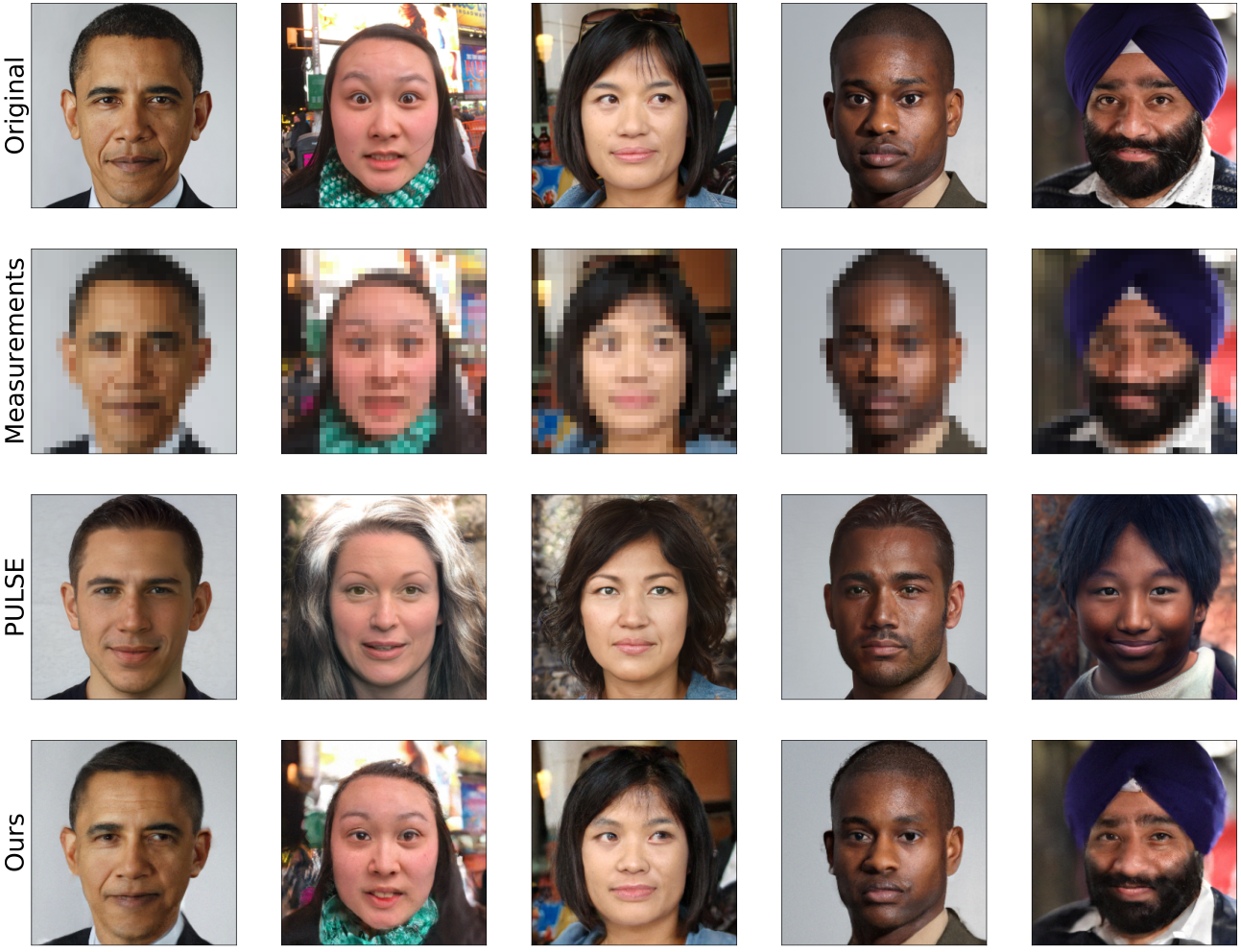

PULSE/ MAP Estimation suffers from majority bias, |

Abstract

This work tackles the issue of fairness in the context of generative procedures, such as image super-resolution, which call for different definitions than the standard classification setting. Moreover, while traditional group fairness definitions are typically stated with respect to specified protected groups (camouflaging the fact that these groupings are artificial and carry historical and political motivations), we emphasize that there are no ground-truth identities. For instance, should South and East Asians be viewed as a single group or as separate groups? Should we consider one race as a whole or further split it by gender? Choosing which groups are valid and who belongs in them is an impossible dilemma, and being "fair" with respect to Asians may require being "unfair" with respect to South Asians. This motivates the introduction of definitions that allow algorithms to be oblivious to the relevant groupings.
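
The Asian/South Asian tension can be made concrete with a toy sketch. The shares below are hypothetical, made-up numbers (not data from the paper), written in integer percentage points. The sketch checks a simple proportional-representation criterion, namely whether each group's share among generated outputs matches its share in the population, under two groupings of the same three labels. The helper names `group_shares` and `max_gap` are illustrative only.

```python
# Toy example with hypothetical shares, in integer percentage points.
true_share = {"south_asian": 10, "east_asian": 10, "other": 80}    # population
output_share = {"south_asian": 0, "east_asian": 20, "other": 80}   # generator outputs

def group_shares(shares, grouping):
    """Sum fine-grained label shares into the groups of one partition."""
    return {g: sum(shares[label] for label in labels) for g, labels in grouping.items()}

def max_gap(grouping):
    """Largest |output share - population share| over the groups of a partition."""
    t = group_shares(true_share, grouping)
    o = group_shares(output_share, grouping)
    return max(abs(o[g] - t[g]) for g in grouping)

coarse = {"asian": ["south_asian", "east_asian"], "other": ["other"]}
fine = {"south_asian": ["south_asian"], "east_asian": ["east_asian"], "other": ["other"]}

print("coarse grouping gap:", max_gap(coarse))  # coarse grouping gap: 0
print("fine grouping gap:", max_gap(fine))      # fine grouping gap: 10
```

The same outputs look perfectly fair when South and East Asians are merged, yet miss each subgroup by 10 points once they are split, so the verdict depends entirely on which partition is chosen.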

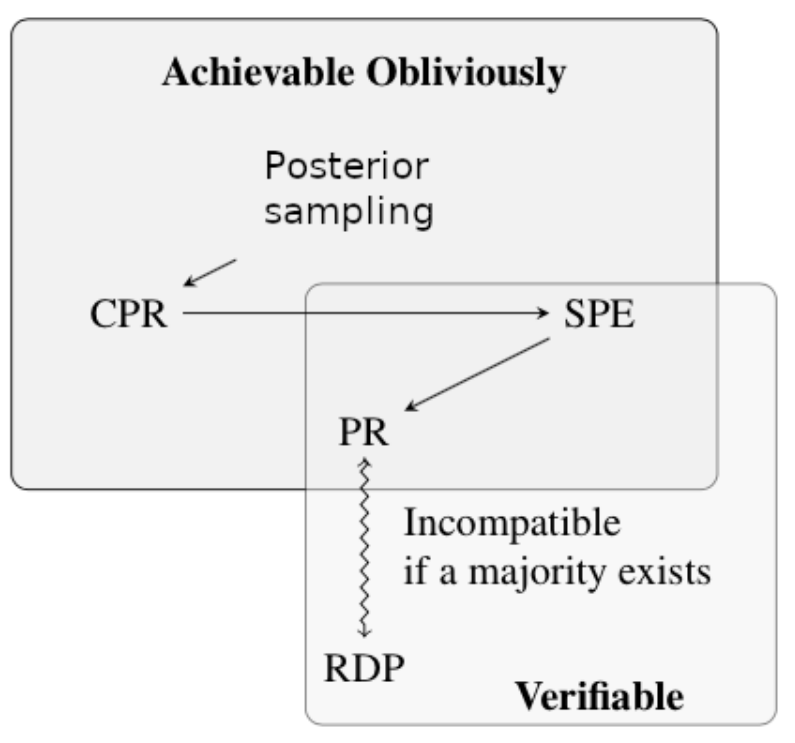

We define several intuitive notions of group fairness and study their incompatibilities and trade-offs. We show that the natural extension of demographic parity is strongly dependent on the grouping, and impossible to achieve obliviously. On the other hand, the conceptually new definition we introduce, Conditional Proportional Representation, can be achieved obliviously through Posterior Sampling. Our experiments validate our theoretical results and achieve fair image reconstruction using state-of-the-art generative models.
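Informally, and as our own paraphrase here, Conditional Proportional Representation asks that for every measurement y and every group G, the probability that the reconstruction lands in G equals the posterior probability that the true image lies in G. The minimal sketch below assumes a hypothetical four-image model with made-up prior and likelihood values (`img_a` through `img_d`, `cpr_gap`, and the numbers are all illustrative). Because Posterior Sampling draws x̂ ~ p(x | y), its output distribution equals the posterior, so the gap is zero for every possible group at once, without being told which groups matter; the MAP estimate puts all its mass on one image and fails. Distributions are compared exactly with `fractions.Fraction` rather than by drawing samples.

```python
from fractions import Fraction as F
from itertools import combinations

# Hypothetical toy model: four candidate images, a prior over them, and the
# likelihood p(y | x) of one fixed low-resolution measurement y.
prior = {"img_a": F(4, 10), "img_b": F(3, 10), "img_c": F(2, 10), "img_d": F(1, 10)}
likelihood = {"img_a": F(1, 2), "img_b": F(1, 2), "img_c": F(1, 2), "img_d": F(1)}

def posterior(prior, likelihood):
    """Bayes' rule: p(x | y) is proportional to p(x) * p(y | x)."""
    joint = {x: prior[x] * likelihood[x] for x in prior}
    z = sum(joint.values())
    return {x: p / z for x, p in joint.items()}

def map_output(post):
    """MAP returns the single most probable image, i.e. a point mass."""
    best = max(post, key=post.get)
    return {x: F(1) if x == best else F(0) for x in post}

def cpr_gap(output_dist, post):
    """Worst |P(output in G | y) - P(truth in G | y)| over every nonempty proper subset G."""
    xs = list(post)
    gap = F(0)
    for k in range(1, len(xs)):
        for group in combinations(xs, k):
            out_mass = sum(output_dist[x] for x in group)
            true_mass = sum(post[x] for x in group)
            gap = max(gap, abs(out_mass - true_mass))
    return gap

post = posterior(prior, likelihood)
# Posterior sampling draws x_hat ~ p(x | y), so its output distribution is `post` itself.
print("posterior sampling:", cpr_gap(post, post))  # posterior sampling: 0
print("MAP:", cpr_gap(map_output(post), post))      # MAP: 7/11
```

Enumerating every subset stands in for "every conceivable grouping"; it is only feasible for a tiny toy model, but it shows why the guarantee holds obliviously.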

Paper and Video

The paper is available on arXiv.

The ICML presentation video is also available.

Project Description

Our earlier work, Compressed Sensing using Generative Models (Bora, Jalal, Dimakis, Price, ICML 2017), showed that generative models can be used to solve various inverse problems such as inpainting, super-resolution, and compressed sensing. Using the distribution defined by a generative model, we showed that the MAP estimate provides good reconstructions. The PULSE super-resolution algorithm improves upon our method and achieves state-of-the-art performance for super-resolution. However, because our 2017 method essentially performs MAP estimation and PULSE builds on it, PULSE is prone to majority bias when reconstructing images of minorities. This is evident in the top image, where a low-resolution image of Barack Obama is reconstructed as a white man.
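The mechanism behind majority bias can be reproduced in a toy simulation that collapses each image to a group label. All parameters are assumptions for illustration, not values from the paper: the minority makes up 20% of the population (`P_MINORITY`), and the degraded measurement y "looks like" the person's true group only 60% of the time (`P_CORRECT`). Under these numbers the posterior probability of the minority group stays below 0.5 for every measurement, so MAP never outputs a minority reconstruction, whereas drawing from the posterior does.

```python
import random

# Hypothetical two-group toy model of a heavily degraded measurement.
P_MINORITY = 0.2   # assumed prior share of the minority group
P_CORRECT = 0.6    # assumed probability that y matches the true group

def posterior_minority(y_is_minority):
    """P(true group = minority | y) by Bayes' rule."""
    like_min = P_CORRECT if y_is_minority else 1 - P_CORRECT
    like_maj = 1 - P_CORRECT if y_is_minority else P_CORRECT
    num = P_MINORITY * like_min
    return num / (num + (1 - P_MINORITY) * like_maj)

def simulate(n, rng):
    """Return the minority share of outputs under MAP and under posterior sampling."""
    map_minority = sampled_minority = 0
    for _ in range(n):
        truth_minority = rng.random() < P_MINORITY
        y_is_minority = truth_minority if rng.random() < P_CORRECT else not truth_minority
        post = posterior_minority(y_is_minority)
        # MAP: pick the single most probable group given y.
        map_minority += int(post > 0.5)
        # Posterior sampling: draw the group from p(group | y).
        sampled_minority += int(rng.random() < post)
    return map_minority / n, sampled_minority / n

map_share, ps_share = simulate(100_000, random.Random(0))
print(f"minority share of outputs  MAP: {map_share:.3f}  posterior sampling: {ps_share:.3f}")
```

The two posteriors here are about 0.27 and 0.14, so the MAP share is exactly 0. Averaging p(minority | y) over measurements recovers the 20% prior, which is why the sampled share lands near 0.2.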

The above example leads to two fundamental questions:

Does this occur due to the bias in the dataset used to train the generative model? Or is there a fundamental problem in the PULSE super-resolution algorithm?

The above example is "unfair" in some sense, but what does it mean for this generative process to be fair?

Results

Algorithmic Results

Our results on the FlickrFaces and AnimalFaces datasets show that MAP estimation suffers from majority bias. Posterior Sampling, however, avoids the majority bias and preserves the diversity of the population.

New Fairness Definitions

We introduce new definitions of fairness in the context of image generation and study their feasibility, incompatibilities, and implications. We also show that Posterior Sampling satisfies many of our fairness definitions.

Oblivious Algorithms

Typical definitions of fairness require a partition of the population, so that we can define protected groups and ensure that algorithms are fair with respect to them. Defining these protected groups, however, requires information about sensitive attributes such as race and gender. Since these attributes are ambiguous, defining a good partition of the population is extremely difficult, if not impossible. We show that even without information about protected groups, Posterior Sampling satisfies some fairness guarantees. Furthermore, if we do have explicit information about the protected groups, we can modify Posterior Sampling to be fair with respect to them.