Instance-Optimal Compressed Sensing via Posterior Sampling

Abstract

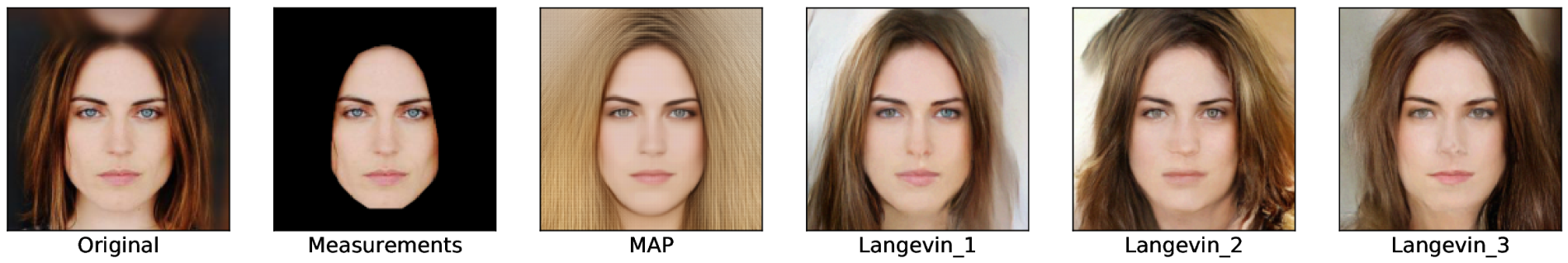

We characterize the measurement complexity of compressed sensing of signals drawn from a known prior distribution, even when the support of the prior is the entire space (rather than, say, sparse vectors). We show for Gaussian measurements and any prior distribution on the signal, that the posterior sampling estimator achieves near-optimal recovery guarantees. Moreover, this result is robust to model mismatch, as long as the distribution estimate (e.g., from an invertible generative model) is close to the true distribution in Wasserstein distance. We implement the posterior sampling estimator for deep generative priors using Langevin dynamics, and empirically find that it produces accurate estimates with more diversity than MAP.

Paper and Video

This is the arXiv link to our paper.

This is the link to our ICML video.

Project Description

Our earlier work, Compressed Sensing using Generative Models (Bora, Jalal, Dimakis, Price, ICML 2017), showed that generative models can be used to solve various inverse problems such as inpainting, super-resolution, compressed sensing, etc. Since many generative models produce images supported on a low-dimensional manifold, we showed that the number of measurements needed to compress and recover an image can be much, much smaller than the number of pixels in the image.

However, recent state-of-the-art generative models such as invertible models and score-based generative models do not satisfy the assumptions made in our earlier work, and existing theory does not satisfactorily explain why they can be used in compressed sensing. For general probability distributions not supported on low-dimensional manifolds, how do we characterize the compressibility of the distribution?

Furthermore, our earlier work used the MAP estimate as the reconstruction. Can MAP be used for general probability distributions / generative models? Or do we need a different algorithm?

Results

Algorithmic Results

We propose Posterior Sampling using generative models, where the reconstruction is sampled from the posterior distribution of the generative model conditioned on the measurements. We further observe that MAP estimation is prone to averaging effects, whereas Posterior Sampling produces natural-looking images with diversity.

New Complexity Definition

We propose the approximate covering number of a distribution, and we show that this new complexity almost-exactly characterizes the compressibility of general distributions.

Upper Bound & Robustness

We show that the approximate covering number upper bounds the number of measurements required by Posterior Sampling to achieve good reconstruction quality.

Furthermore, Posterior Sampling is robust to distribution mismatch. Even if the distribution of the generative model we use does not match the ground-truth distribution, Posterior Sampling will produce good reconstructions as long as the distributions are close in Wasserstein distance.

Lower Bound

We additionally show that for any distribution over the ground-truth, any algorithm that achieves good reconstructions requires measurements lower bounded by the approximate covering number of the ground-truth distribution.

Instance Optimality

Typical lower bounds in compressed sensing consider specific worst-case distributions. A typical example is the uniform distribution on a well-separated sparse code. On the other hand, our lower bound applies to any distribution, without any assumptions on the probability distribution. Hence our results are per-instance, rather than worst-case.

Using our upper and lower bounds, we can conclude that Posterior Sampling is an almost-optimal algorithm for any probability distribution.